Wan 2.1 vs Sora: A Comprehensive Comparison

By Wan 2.1

07 Mar 2025

As AI technology advances, video generation is emerging as a frontier with enormous potential. Leading this shift are two incredible models: Wan 2.1, Alibaba’s open-source innovation, and Sora, OpenAI’s closed-source powerhouse. Each brings unique capabilities and philosophies to the fore.

Text-to-Video (T2V) technology translates text descriptions into compelling video narratives through a three-step process: understanding text, creating visuals, and generating coherent motion sequences.

Image-to-Video (I2V) begins with a static image and converts it into a dynamic scene by adding movement, filling in the missing details from what the model has learned about anatomy and physics.

Despite remarkable progress, challenges remain: ensuring temporal consistency from frame to frame, adhering to the laws of physics, preserving details, maintaining narrative coherence, and managing computational demands.
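To make the temporal-consistency challenge a little more concrete, here is a minimal sketch of a crude flicker measure: the average absolute pixel change between consecutive frames. The function name `mean_frame_difference` and the tiny grayscale frames are hypothetical, chosen for illustration only. This is not a metric used by Wan 2.1, Sora, or VBench.

```python
def mean_frame_difference(frames):
    """Average absolute pixel change between consecutive frames.

    `frames` is a list of equally sized 2D grids (lists of rows) holding
    grayscale values. Lower results mean steadier video. This is a crude
    illustrative proxy, not a benchmark metric.
    """
    if len(frames) < 2:
        return 0.0
    total, count = 0.0, 0
    for prev, curr in zip(frames, frames[1:]):
        for row_prev, row_curr in zip(prev, curr):
            for a, b in zip(row_prev, row_curr):
                total += abs(a - b)
                count += 1
    return total / count


# Four identical 2x2 frames: nothing changes over time.
steady = [[[10, 10], [10, 10]] for _ in range(4)]
# Frames that alternate between dark and bright: heavy flicker.
flicker = [[[10, 10], [10, 10]], [[200, 200], [200, 200]]] * 2

print(mean_frame_difference(steady))   # 0.0
print(mean_frame_difference(flicker))  # 190.0
```

A low score alone does not mean a good video, since a frozen frame also scores zero, which is why real evaluations combine several measures.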

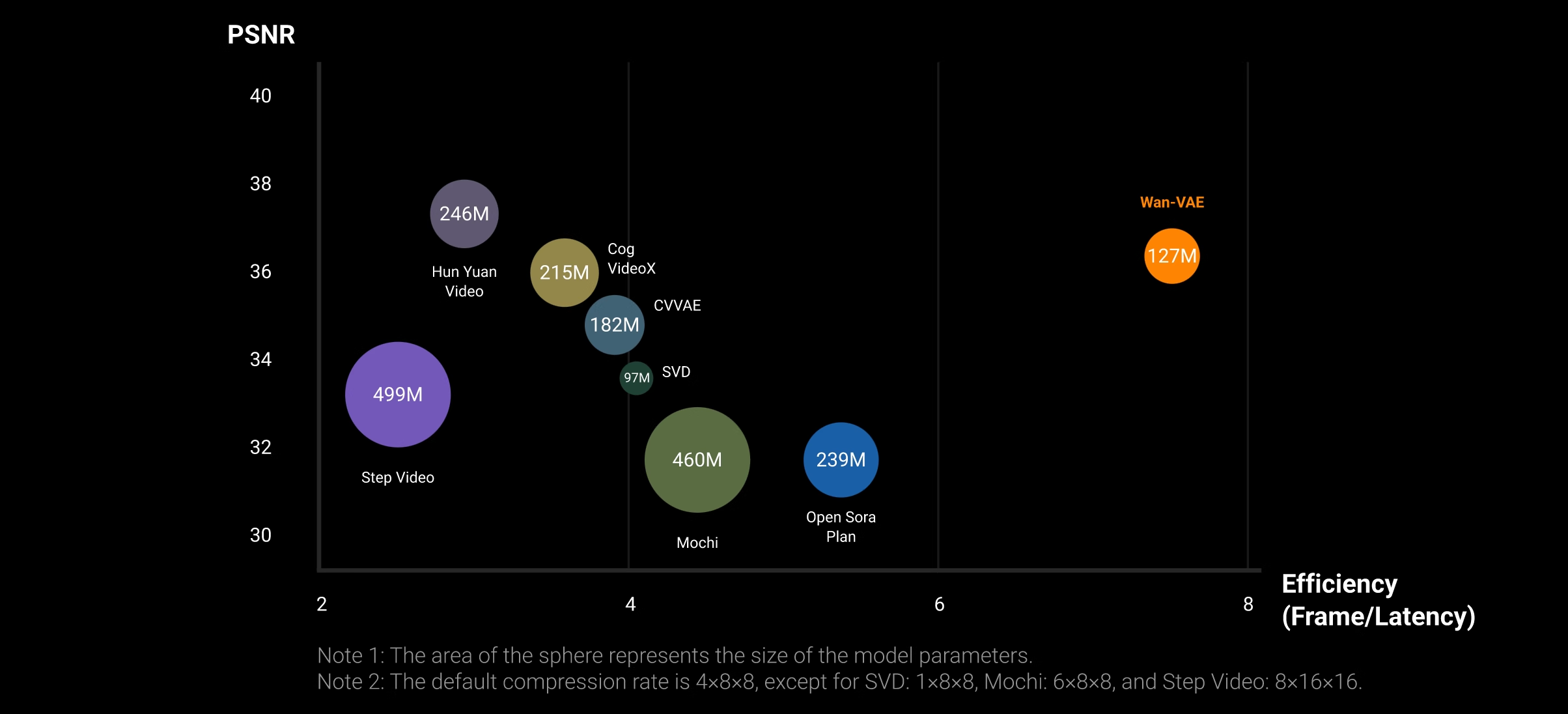

Wan 2.1 builds on three key components: Spatio-Temporal Variational Autoencoders (ST-VAE), Diffusion Transformers, and multilingual support, which together make AI video creation accessible and versatile.
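As a rough illustration of why a spatio-temporal VAE matters, the sketch below computes the size of the latent grid that a diffusion transformer would work on instead of raw pixels. The helper `latent_shape` is hypothetical, and its default compression factors (4x in time, 8x in height and width, with the first frame kept on its own) are assumptions made for this example rather than figures stated in this article.

```python
def latent_shape(frames, height, width, t_factor=4, s_factor=8):
    """Return (latent_frames, latent_height, latent_width).

    Assumes the first frame is encoded on its own and the remaining
    frames are grouped in chunks of `t_factor`. The default factors are
    illustrative assumptions, not confirmed model settings.
    """
    if frames < 1:
        raise ValueError("need at least one frame")
    if height % s_factor or width % s_factor:
        raise ValueError("height and width must be multiples of s_factor")
    latent_frames = 1 + (frames - 1) // t_factor
    return latent_frames, height // s_factor, width // s_factor


# An 81-frame clip at 480x832 pixels (example numbers only).
shape = latent_shape(81, 480, 832)
print(shape)  # (21, 60, 104)

pixel_positions = 81 * 480 * 832
latent_positions = shape[0] * shape[1] * shape[2]
print(pixel_positions // latent_positions)  # whole-number reduction factor
```

Under these assumed factors the model handles a few hundred times fewer spatial and temporal positions than the raw clip, which is the main reason latent-space generation keeps memory use manageable.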

The Three Warriors: Wan 2.1’s Model Variants

Wan 2.1 is trained on massive datasets using a six-stage process to achieve excellence in video generation, supported by a meticulous data filtering strategy to ensure top-tier quality.

Sora’s distinguishing feature is its fusion of diffusion models and transformers, which treats a video as one cohesive spatio-temporal structure rather than a sequence of independent frames. The model behaves as though it has an internal physics engine, producing lifelike videos, albeit occasionally with quirky physical errors.
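One way to picture a video handled as a single structure is to cut it into small blocks that span both space and time, then feed each block to a transformer as a token. The sketch below only enumerates where such blocks would start. The function `spacetime_patches` and the patch sizes are hypothetical, since Sora is closed-source and its actual settings are not public.

```python
def spacetime_patches(frames, height, width, pt, ph, pw):
    """Yield the (t, y, x) origin of each non-overlapping spacetime patch.

    Each patch covers `pt` frames, `ph` rows and `pw` columns. Leftover
    frames or pixels that do not fill a whole patch are skipped.
    """
    for t in range(0, frames - pt + 1, pt):
        for y in range(0, height - ph + 1, ph):
            for x in range(0, width - pw + 1, pw):
                yield (t, y, x)


# An 8-frame, 32x32 clip split into patches of 2 frames x 16 x 16 pixels.
tokens = list(spacetime_patches(frames=8, height=32, width=32, pt=2, ph=16, pw=16))

print(len(tokens))  # 16 tokens: 4 time steps x 2 rows x 2 columns
print(tokens[0])    # (0, 0, 0)
print(tokens[-1])   # (6, 16, 16)
```

Because every token carries a slice of time as well as space, attention across tokens can relate what happens in one frame to what happens several frames later.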

Sora boasts an intuitive interface, complete with advanced tools like Remix, Recut, Loop, Blend, and Storyboard, catering to users across experience levels. It also offers a community-driven platform for collaboration and exploration.

Sora’s tiered subscription model provides options for casual users and professionals, with the Pro offering higher resolution and an array of advanced tools for a premium content creation experience.

Wan 2.1 produces slightly imperfect yet realistic motion, while Sora delivers very smooth transitions, sometimes at the expense of human realism.

Wan 2.1’s cinematic output shines with dramatic color grading, whereas Sora provides polish but lacks emotive depth.

Wan 2.1 offers broader capabilities, including multi-task support and adaptability, while Sora focuses on high-fidelity, cloud-based video synthesis.

Wan 2.1: Thanks to its multilingual support and ability to render realistic motion physics, Wan 2.1 is ideal for creating content that resonates authentically across global platforms like TikTok and Instagram. It caters to diverse audiences with seamless adaptability to different contexts, making it perfect for product showcases and environmental simulations.

Sora: With an edge in artistic creativity, Sora excels in generating visually striking content that captivates audiences with unique stylistic elements. Its high-level artistic flair makes it particularly effective for creative storytelling and viral marketing campaigns on platforms like TikTok.

Best Choice: Wan 2.1 is best for creators seeking realism and multilingual accessibility, while Sora is optimal for those prioritizing artistic expression and narrative depth. Many creators might find value in utilizing both, depending on their creative goals.

Wan 2.1: With its capacity for generating complex, accurate scenes, Wan 2.1 is an excellent tool for visualizing action sequences and preproduction planning. Its open-source framework offers customization, a valuable asset in large-scale productions where unique details and tailored modifications are vital.

Sora: The Storyboard feature enables Sora to test narrative flows effectively, making it a powerful tool for directors exploring different styles and pacing. Its high-resolution output aids in visualizing end products closely, which is crucial in high-fidelity film and video production.

Best Choice: Wan 2.1 is well-suited for projects that require intensive scene detailing and custom modification, whereas Sora's storytelling capabilities make it ideal for narrative-driven film previsualization.

Wan 2.1: Excelling in dynamic product demos and scene simulation, Wan 2.1 provides marketing teams the flexibility to create diverse visuals efficiently within existing workflows, allowing for rapid production without extensive resources.

Sora: Sora’s strength lies in creating emotionally engaging content with high visual fidelity. It is especially attractive for luxury and high-end brands needing visually polished, brand-focused narratives for impactful advertising.

Best Choice: For creative storytelling and emotional engagement, Sora leads, while Wan 2.1 offers practical solutions for dynamic visualizations and brand demonstrations.

Wan 2.1: Due to its open-source nature, Wan 2.1 is a powerful tool for businesses seeking deep integration and the option to personalize applications to meet specific needs. API access furthers its utility for developers.

Sora: Its user-friendly cloud interface makes Sora appealing for companies lacking technical skills, supporting quick deployment without hardware constraints. Sora benefits from OpenAI’s ongoing updates, providing stable solutions for enterprises.

Best Choice: Tech-oriented companies may prefer Wan 2.1 for its integration potential, while Sora offers a more accessible, immediate solution for non-technical teams. Many larger enterprises might integrate both tools for varied requirements.

Throughout our detailed exploration, Wan 2.1 and Sora have demonstrated their individual strengths, each embodying distinct philosophies in the realm of AI video generation.

Two Models, Two Philosophies

Wan 2.1: Developed by Alibaba, Wan 2.1 is built on the principles of openness and versatility. Its open-source framework invites collaboration and customization. The model supports multiple languages, handles a variety of tasks efficiently, and offers a broad range of functionality, from text-to-video and image-to-video conversion to video editing and text-to-image generation. This focus on efficiency and flexibility makes it a top choice for developers and enterprises seeking to integrate AI video generation deeply into their systems.

Sora: By contrast, Sora by OpenAI embodies a polished, user-centric approach. Its closed-source model delivers a seamless user experience with sophisticated creative tools and high-definition outputs up to 1080p. Simple deployment through its cloud-based service, alongside editing capabilities like Remix and Storyboard, makes it particularly attractive for professional artists, filmmakers, and creative industries. However, its subscription-based access may pose a barrier to individual creators seeking affordable solutions.

Both Wan 2.1 and Sora reflect the current advancements and potential of AI video generation technology. Their differences illustrate the possibilities of open-source collaboration versus the refinement of exclusive innovation.

Wan 2.1 stands out for those who prioritize adaptability, cost-effectiveness, and multilingual inclusivity, making it ideal for a wide array of applications from enterprise solutions to grassroots creativity. Sora shines when advanced creativity, narrative coherence, and high-resolution visuals are central to a user’s needs, making it suitable for high-end content production and artistic endeavors. Choosing between them depends on whether you prioritize openness and customization or polished, high-quality outputs with extensive creative toolsets.

These models are pushing the boundaries of how AI can aid in storytelling, creating new paradigms for artistic expression and commercial applications alike. As AI technology continues to evolve, the future holds exciting possibilities for even more sophisticated and accessible video generation tools, continually reshaping how creators and enterprises produce visual content.

Both Wan 2.1 and Sora play integral roles in this transformation, highlighting our journey towards more vibrant, dynamic, and creative digital landscapes. Whether you lean toward the flexibility of Wan 2.1 or the polished experience of Sora, the key is to harness these tools to amplify human creativity, crafting stories and experiences that resonate and enthrall.

Chart 1: Performance Comparison on VBench

Table 1: Comparison of Wan 2.1 and Sora

| Feature | Wan 2.1 | Sora |
|---|---|---|
| Generation Quality | 86.22% on VBench | High realism, limited to 1 minute |
| Computational Efficiency | 8.2GB VRAM for 480P video | High computational demands |
| Technical Architecture | Diffusion Transformer + Wan-VAE | Diffusion Transformer |
| Application Scenarios | Multi-task support | Primarily video generation |
